DMSC Devlog: Week 8 - Video Synthesis

Up to this week, our class had mostly focused on generative art and environments, in which the computer creates “random” visuals from a human-designed set of rules. This week took a different approach to computer art with the introduction of Hydra, a live-coding video synthesizer. Inspired by analog modular synthesizers, Hydra ships with its rules already written as building blocks, leaving the programmer to chain shapes, oscillators, and effects into their own visuals. This was the first step towards hosting our own algorave: think pumping dance music and intense lighting effects, performed by writing code in real time.

While I never got the hang of editing my code live and my computer struggled to keep up with more elaborate patterns, I had a lot of fun putting the fancy shapes and colors together. Being limited by weaker hardware helped me get more creative with sketches that were compelling but not too complicated.

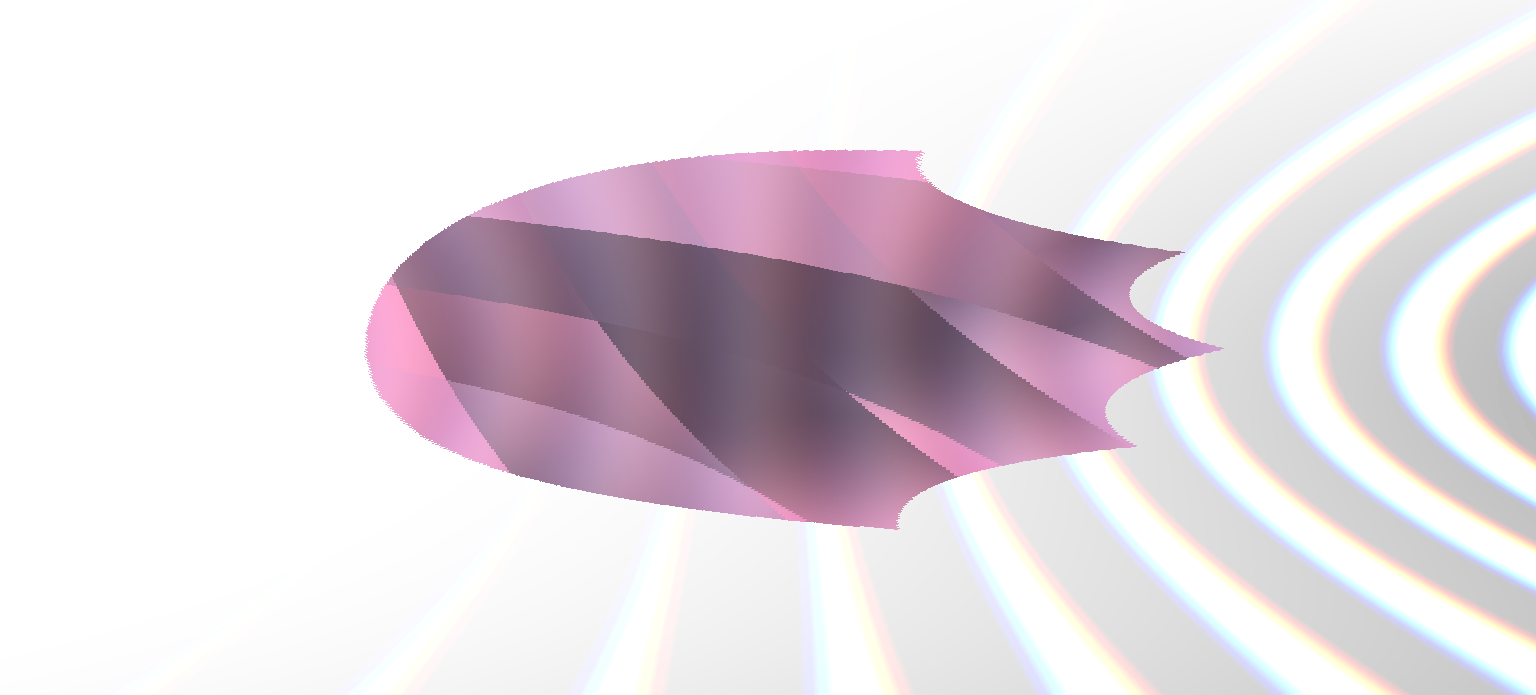

These three sketches represent the range I could capture with the synth, including using sound (sketch 1, best synced to Magdalena Bay’s “Image”) or other oscillators (sketch 3) to modulate an image. While the whale-surfacing vibes of sketch 2 were cool to design and the pulsing high-contrast shapes in sketch 1 are always somewhat compelling, I’m still most satisfied with sketch 3’s “beating heart in a ribcage” imagery, in part because I can’t believe how well it runs on my computer.

```javascript
// Sketch 1: using an external device as your browser's audio input is the best solution I could come up with, but audio modulation still seems finicky
a.setBins(5) // split the incoming audio spectrum into 5 FFT bins
a.show()     // draw the bins on screen to check the audio input
bpm = 61     // array parameters below advance one value per beat

osc(2,0.1).color(6,-3,-2).rotate([0,0,4],0.2).scroll([6,2],0,0,0.4)
  .rotate(5,0.3)
  .repeat(6,2)
  .scroll(4,0,0.02,0.06)
  .colorama(0.07)
  .sub(osc([1,600,30,7,10000])
    .color([4,2],[2,5],[7])
    .rotate([0,Math.PI],-0.2))
  .rotate(2)
  // the arrow function re-reads the lowest audio bin on every frame
  .mask(shape([2,3,4,5,6]).modulateScale(noise(3), () => a.fft[0]*5)
    .color([1,-10,10,-1],[1,-10,10,-1],[1,-10,10,-1]))
  .out();
```
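Why the arrow function in sketch 1? Hydra re-evaluates a function parameter on every frame, whereas a plain expression like `a.fft[0]*5` is turned into a single number at the moment the line runs, so only the function version follows the music. The snippet below is a plain-JavaScript mock rather than Hydra itself, so it runs anywhere: the `a` object is a stand-in for Hydra's audio object, and `resolveParam` is a hypothetical helper imitating the per-frame evaluation, not part of Hydra's API.

```javascript
// Mock of Hydra's audio object: five bins, as after a.setBins(5).
// This is a stand-in, not the real Hydra object.
const a = { fft: [0, 0, 0, 0, 0] };

// Hypothetical per-frame resolver (not a Hydra function): call functions,
// pass plain numbers through unchanged.
function resolveParam(param) {
  return typeof param === "function" ? param() : param;
}

const staticAmount = a.fft[0] * 5;     // evaluated once, right now
const liveAmount = () => a.fft[0] * 5; // evaluated every time it is called

// Simulate three frames in which the lowest bin gets louder.
const frames = [];
for (const level of [0.1, 0.4, 0.8]) {
  a.fft[0] = level;
  frames.push([resolveParam(staticAmount), resolveParam(liveAmount)]);
}

console.log(frames); // static column never changes; live column tracks the level
```

The same reasoning applies to sketch 2's `() => Math.sin(time/8)*0.0004`, which only keeps drifting because it is wrapped in a function.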

```javascript
// Sketch 2: looks better on the platform than in picture 1
// osc's second argument (sync) drifts slowly with a sine of Hydra's clock, `time`
osc(70, () => Math.sin(time/8)*0.0004, 1.14)
  .color(1.33,0.75,-0.68)
  .luma(0.810,0.62)
  .diff(shape(4,0.2,0.7)
    .rotate(Math.PI/4 + 0.55)
    .repeat(2,2)
    .scrollX(-0.05,-0.1)
    .rotate(-0.55)
    .sub(osc(5,0.4))
    .luma(0.67,0.4)
  )
  .sub(osc(2,0.7,1.23).luma(0.36,0.2))
  .out();
```

```javascript
// Sketch 3: see pictures 2 & 3
bpm = 55;

// rotating gradient rendered to buffer o0, reused as a texture below
gradient(2).color(1.07,-1.08,1.28).luma(0.06,0.64).rotate(0,0.5)
  .out(o0);

// ---outside heart
osc(80,0.05,0.37).sub(src(o0).thresh(0.28,0.45)).modulateScale(
    osc(Math.PI, 0, Math.PI).rotate(Math.PI/2),2,1)
  .layer(
    // ---goes inside heart
    shape(3,0.5,-0.4).blend(src(o0).kaleid(3))
      .color(0.82,0.53,0.66).modulatePixelate(o0,7,4)
      .mask(
        // ---heart
        shape(5,0.5,0).color(1.8,0.87,1)
          .modulateRotate(o0,4,0)
          .modulateScale(src(o0),[0.6,0.4].smooth(),0.8)
      ))
  .out(o2);

render(o2) // show only buffer o2 on screen
```
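Sketches 1 and 3 also pass arrays such as `[0,0,4]` and `[0.6,0.4].smooth()`, which Hydra steps through in time with the `bpm` variable. Below is a simplified model of that behavior, assuming one element per beat, looping, with `.smooth()` approximated as a linear glide to the next element. The function names are hypothetical, and Hydra's actual easing and timing may differ.

```javascript
// Simplified model (an assumption, not Hydra's source code) of how an array
// parameter is sequenced against the global bpm.

// Convert elapsed seconds into elapsed beats at a given tempo.
function beatsElapsed(timeSec, bpm) {
  return timeSec * (bpm / 60);
}

// Stepped playback: hold each element for one beat, then loop.
function steppedValue(arr, beat) {
  return arr[Math.floor(beat) % arr.length];
}

// Rough stand-in for `.smooth()`: glide linearly from the current element
// to the next one over the course of each beat instead of jumping.
function smoothedValue(arr, beat) {
  const i = Math.floor(beat) % arr.length;
  const next = (i + 1) % arr.length;
  const t = beat - Math.floor(beat); // progress through the current beat, 0..1
  return arr[i] + (arr[next] - arr[i]) * t;
}

const bpm = 55;            // tempo from sketch 3
const scales = [0.6, 0.4]; // modulateScale amounts from sketch 3

console.log("seconds per beat:", (60 / bpm).toFixed(2));
for (const beat of [0, 0.5, 1, 1.5, 2]) {
  console.log(beat, steppedValue(scales, beat), smoothedValue(scales, beat).toFixed(2));
}
```

Under this model, at `bpm = 55` each element is held for about 1.09 seconds (60 / 55), so the two-value cycle repeats roughly every 2.2 seconds.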

My biggest gripe is the barrier to entry. To get much mileage out of Hydra, it feels like I need both enough RAM and enough knowledge of JavaScript to create more intricate visuals. Passed Recordings on YouTube has a great video [insert] demonstrating that you don’t need to be super familiar with the code under the hood to use Hydra effectively, but music visualizers feel like the accessible exception rather than the rule. I don’t know. One of these days I’ll make a waving skull and crossbones for a “Pirate Jenny” music video, but until then Hydra might just collect dust in my bookmarks.

-Rafi

Drawing, Moving, and Seeing with Code Devlogs

- Status: In development
- Category: Other
- Author: rafi.pdf

