DMSC Devlog: Week 4 - Random Seeding

It's been a while since I posted a devlog, but I've got a lot of backlogged work to document here. This is from a February project assigned to us by a guest speaker, Jonah Senzel. Jonah Senzel, who writes music for Daniel Mullins, developer of indie hit Inscryption? As a roguelike-deckbuilder enjoyer and someone who watched Markiplier in 2016, this was a big day for me.

After learning about his independent work (find it here!), we started exploring entropy: how it manifests in the real world, how computers simulate it, and how to leverage that imperfect simulation in p5.js. Inspired by Brian Eno's Oblique Strategies, we were tasked with creating a sketch that randomly generates something new each day, using the randomSeed() function to guarantee the same result on any computer. To convert the date into a number that could be fed into randomSeed(), and to work around p5.js's random output looking too similar from one day's seed to the next, we collectively (with immense help from our professor) settled on this:

    // Build a YYYYMMDD seed from today's date (toJSON() reports the date in UTC)
    let date = new Date();
    date = date.toJSON().slice(0, 10).split('-').join('');
    let ourSeed = int(date);
    randomSeed(ourSeed);

    // p5.js randomness seems to be less random between steadily incrementing randomSeeds
    // so we'll generate some random numbers to "filter" our random output later
    for (let i = 0; i < 10000; i++) {
      random(0, 100);
    }
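To get a feel for why the warm-up loop helps, here is a plain-JavaScript sketch that runs outside p5.js. The names `dateToSeed`, `makeLcg`, and `circularGap` are hypothetical, and the generator is an assumption: a linear congruential generator with the well-known Numerical Recipes constants, not code taken from p5.js. Under that assumption, the first values from two neighbouring daily seeds land only about 0.0004 apart, while the second values are already about 0.09 apart, so discarding some output before using it separates the days.

```javascript
// Hypothetical helper: YYYYMMDD integer from the UTC calendar date,
// the same digits that toJSON().slice(0, 10) yields.
function dateToSeed(date) {
  return Number(date.toJSON().slice(0, 10).split('-').join(''));
}

// Assumed generator: a linear congruential generator with the
// Numerical Recipes constants. This is an illustration, not p5.js source.
const M = 4294967296; // 2^32
const A = 1664525;
const C = 1013904223;

function makeLcg(seed) {
  let state = seed >>> 0; // keep the seed in the unsigned 32-bit range
  return function next() {
    state = (A * state + C) % M; // stays below 2^53, so the arithmetic is exact
    return state / M;            // value in [0, 1)
  };
}

// Distance between two values in [0, 1), treating the range as a circle.
function circularGap(x, y) {
  const d = Math.abs(x - y);
  return Math.min(d, 1 - d);
}

const today = dateToSeed(new Date(Date.UTC(2025, 1, 18)));    // 20250218
const tomorrow = dateToSeed(new Date(Date.UTC(2025, 1, 19))); // 20250219

const rngA = makeLcg(today);
const rngB = makeLcg(tomorrow);

// First values from neighbouring seeds sit A / M (about 0.0004) apart.
console.log(circularGap(rngA(), rngB()));

// Discard a batch of values, as the warm-up loop does, then compare again.
for (let i = 0; i < 10000; i++) {
  rngA();
  rngB();
}
console.log(circularGap(rngA(), rngB()));
```

Because `toJSON()` works in UTC, the seed rolls over at midnight UTC rather than local midnight, which is what keeps the result identical on every computer.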

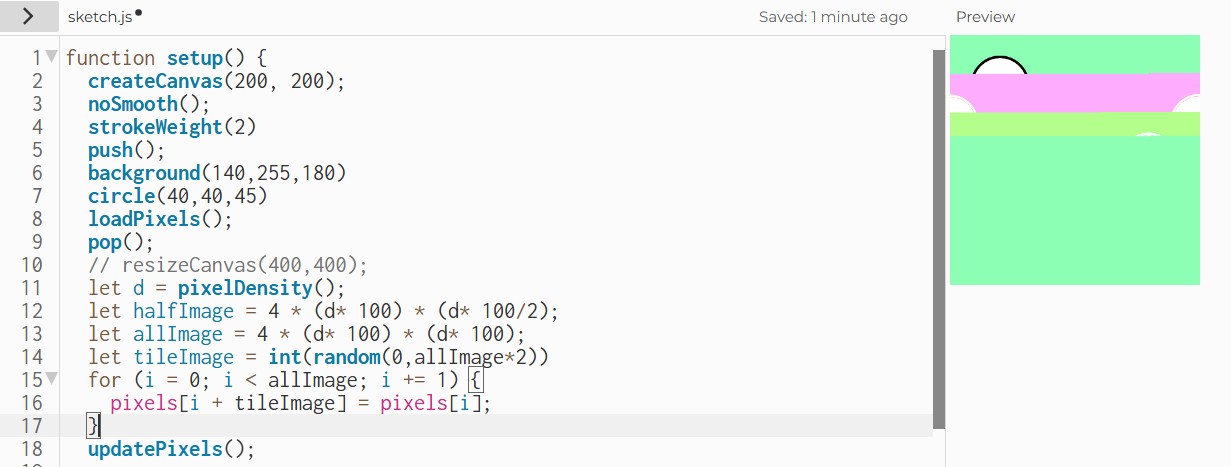

My original idea for a daily generation was a random filtered photo with a randomly generated "introspective" caption. I was pulling from overly-Photoshopped vaporwave and Frutiger Aero edits, InspiroBot's inspirational posters, and projects I had done in Processing for a previous computer science class. What I didn't realize was that each index of Processing's pixels[] array stores a whole color, while in p5.js each index stores a single RGBA channel, making the array four times bigger and requiring four indices to determine the color of each pixel.

I got this far relearning the math, experimenting with how loadPixels() works, and attempting to tile the canvas before realizing the library includes its own commands for masking, blending, and filtering images. This killed my motivation to continue and I scrapped the sketch.
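For reference, the four-indices-per-pixel layout can be sketched in plain JavaScript without a canvas. The helpers `pixelIndex`, `setPixel`, and `getPixel` are hypothetical names, and the typed array is only a stand-in for p5's pixels[]. It also assumes a pixel density of 1; on high-density displays the real array is larger.

```javascript
// Stand-in for a canvas: 3 x 2 pixels, four bytes (R, G, B, A) per pixel.
const width = 3;
const height = 2;
const pixels = new Uint8ClampedArray(width * height * 4);

// Hypothetical helper: index of the red channel for pixel (x, y).
// Green, blue, and alpha follow at +1, +2, and +3.
function pixelIndex(x, y, w) {
  return 4 * (y * w + x);
}

function setPixel(x, y, r, g, b, a) {
  const i = pixelIndex(x, y, width);
  pixels[i] = r;
  pixels[i + 1] = g;
  pixels[i + 2] = b;
  pixels[i + 3] = a;
}

function getPixel(x, y) {
  const i = pixelIndex(x, y, width);
  return Array.from(pixels.slice(i, i + 4));
}

setPixel(2, 1, 255, 128, 0, 255);
console.log(pixels.length);           // 24
console.log(pixelIndex(2, 1, width)); // 20
console.log(getPixel(2, 1));          // [255, 128, 0, 255]
```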

As I started to grind through late work, I returned to the randomSeed() project with a "daily Amen break," which would randomly chop the Amen break into a looping beat I could use for inspiration later. I recorded the break in Audacity, divided the duration into 16 beats in p5.js, and saved the timestamp of each beat in an index of an array as a "chop". Playing the timestamps in order replays the original break, while shuffling the array at the start of the sketch plays a random remix of it. The break's beats are rhythmic enough to sound decent in any order, especially with some tweaks to their pitch and playback speed. (See it here.)
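Before the full sketch, here is the chop-and-shuffle idea on its own, outside p5.js. Everything named here is hypothetical: `chopTimes` and `seededShuffle` are illustration helpers, the 8-second duration is made up, and mulberry32 is a small seeded generator standing in for randomSeed() and random(), so it will not reproduce the order p5.js picks.

```javascript
// Hypothetical helper: start time (in seconds) of each equal-length chop.
function chopTimes(duration, numberOfBeats) {
  const interval = duration / numberOfBeats;
  return Array.from({ length: numberOfBeats }, (_, i) => i * interval);
}

// mulberry32: a small seeded generator, used as a stand-in for p5's
// seeded random(). Same seed in, same sequence out.
function mulberry32(seed) {
  let state = seed >>> 0;
  return function () {
    state = (state + 0x6D2B79F5) >>> 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Fisher-Yates shuffle on a copy, drawing from the supplied generator.
function seededShuffle(items, rand) {
  const out = items.slice();
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(rand() * (i + 1));
    [out[i], out[j]] = [out[j], out[i]];
  }
  return out;
}

const chops = chopTimes(8, 16); // 8 seconds is a made-up duration
const todayOrder = seededShuffle(chops, mulberry32(20250218));
const sameDayAgain = seededShuffle(chops, mulberry32(20250218));

console.log(todayOrder);
console.log(JSON.stringify(todayOrder) === JSON.stringify(sameDayAgain)); // true
```

Playing `chops` in order walks through the file from start to finish, while `todayOrder` holds the same sixteen timestamps in the day's remixed order.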

    let sound, soundLoop, timeInterval;
    let amenbreak = [];
    let beat = 0;
    let numberOfBeats = 16;

    function preload() {
      sound = loadSound('amen break chop.mp3');
    }

    function setup() {
      let cnv = createCanvas(250, 200);
      timeInterval = sound.duration() / numberOfBeats;
      soundLoop = new p5.SoundLoop(onSoundLoop, timeInterval);
      // store the start time of each chop
      for (let i = 0; i < numberOfBeats; i++) {
        amenbreak[i] = i * timeInterval;
      }
      sound.playMode("restart");

      // random shuffle every day
      let date = new Date();
      date = date.toJSON().slice(0, 10).split('-').join('');
      let ourSeed = int(date);
      randomSeed(ourSeed);
      // warm up the generator so consecutive days don't sound alike
      for (let i = 0; i < 10000; i++) {
        random(0, 100);
      }
      shuffle(amenbreak, true);

      cnv.mousePressed(canvasPressed);
    }

    function draw() {
      background(220);
      if (!sound.isPlaying()) {
        text("Click to play today's Amen break!", 10, 70);
      } else {
        text("Click to pause today's Amen break!", 10, 70);
      }
      text("Timestamp: " + sound.currentTime(), 10, 120);
      // count up the beats played so far in this loop
      for (let i = 0; i <= beat; i++) {
        text(i, i * 15 + 11, 140);
      }
    }

    function canvasPressed() {
      userStartAudio();
      if (soundLoop.isPlaying) {
        sound.stop();
        soundLoop.stop();
      } else {
        soundLoop.start();
      }
    }

    function onSoundLoop() {
      beat = (soundLoop.iterations - 1) % numberOfBeats;
      // change parameter 2 for pitch/speed, parameter 4 for start time,
      // and parameter 5 for chop duration
      sound.play(0, 0.94, 1, amenbreak[beat], timeInterval);
      // uncomment to turn looping off
      // if (beat == 0 && soundLoop.iterations > 1) {
      //   sound.stop();
      //   soundLoop.stop();
      // }
    }

It's a little clunky: p5.SoundLoop tends to loop a bit irregularly, but I was having trouble implementing accurate timers in draw() and found this more convenient than using soundFile.jump() to switch between chops. (Mister Bomb on YouTube has a very elegant solution in his audio file sample selector, using the first parameter of play() to string chops together in advance.) That said, it should be easy to swap different drum breaks into the sketch if I ever get sick of this one, which I really appreciate. At this point, I'm happy to have something that works.
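As a rough idea of what stringing chops together in advance could look like, the snippet below builds the argument list for each play() call up front. This is not Mister Bomb's code. `buildSchedule` is a hypothetical helper, the chop values are made up, and the result has not been tested against p5.sound, so how queued calls interact with playMode("restart") would still need checking.

```javascript
// Hypothetical helper: one entry per chop, in the argument order that
// p5.SoundFile.play(startTime, rate, amp, cueStart, duration) accepts.
function buildSchedule(chops, interval, rate) {
  return chops.map((cueStart, i) => [
    i * interval, // startTime: delay before this chop begins
    rate,         // playback rate (pitch/speed)
    1,            // amplitude
    cueStart,     // where in the file the chop starts
    interval,     // how much of the file to play
  ]);
}

// Made-up example: four half-second chops in a shuffled order.
const shuffledChops = [1.5, 0, 1, 0.5];
const schedule = buildSchedule(shuffledChops, 0.5, 0.94);

console.log(schedule[0]); // [0, 0.94, 1, 1.5, 0.5]
console.log(schedule[3]); // [1.5, 0.94, 1, 0.5, 0.5]

// In a p5 sketch, each entry could then be handed over with
// something like: schedule.forEach((args) => sound.play(...args));
```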

-Rafi

Drawing, Moving, and Seeing with Code Devlogs

| Status | In development |

| Category | Other |

| Author | rafi.pdf |
